NaturalSpeech 3: Zero-Shot Speech Synthesis with Factorized Codec and Diffusion Models

Zeqian Ju¹,²*, Yuancheng Wang³*, Kai Shen⁴,¹*, Xu Tan¹*, Detai Xin¹,⁵, Dongchao Yang¹, Yanqing Liu¹, Yichong Leng¹, Kaitao Song¹, Siliang Tang⁴, Zhizheng Wu³, Tao Qin¹, Xiang-Yang Li², Wei Ye⁶, Shikun Zhang⁶, Jiang Bian¹, Lei He¹, Jinyu Li¹, Sheng Zhao¹

¹Microsoft Research Asia & Microsoft Azure Speech

²University of Science and Technology of China

³The Chinese University of Hong Kong, Shenzhen

⁴Zhejiang University

⁵The University of Tokyo

⁶Peking University

Abstract.

While recent large-scale text-to-speech (TTS) models have achieved significant progress, they still fall short in speech quality, similarity, and prosody. Considering that speech intricately encompasses various attributes (e.g., content, prosody, timbre, and acoustic details) that pose significant challenges for generation, a natural idea is to factorize speech into individual subspaces representing different attributes and generate them individually. Motivated by this, we propose NaturalSpeech 3, a TTS system with novel factorized diffusion models to generate natural speech in a zero-shot way. Specifically, 1) we design a neural codec with factorized vector quantization (FVQ) to disentangle the speech waveform into subspaces of content, prosody, timbre, and acoustic details; 2) we propose a factorized diffusion model to generate the attributes in each subspace following its corresponding prompt. With this factorization design, NaturalSpeech 3 can effectively and efficiently model intricate speech with disentangled subspaces in a divide-and-conquer way. Experiments show that NaturalSpeech 3 outperforms state-of-the-art TTS systems in quality, similarity, prosody, and intelligibility. Furthermore, we achieve better performance by scaling to 1B parameters and 200K hours of training data.
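To make step 1) more concrete, here is a toy Python sketch of factorized vector quantization in general: the latent vector of one frame is split into attribute subspaces, and each subspace is snapped to the nearest codeword of its own codebook, giving one discrete code per attribute. It is an illustration under stated assumptions, not FACodec: the fixed slicing stands in for learned projections, the codebooks and latent values are made up, the attribute labels are borrowed from the abstract without implying that FACodec treats every attribute this way, and the encoder, decoder, and training objectives are omitted.

```python
from typing import Dict, List, Tuple

Vector = List[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(vector: Vector, codebook: List[Vector]) -> Tuple[int, Vector]:
    """Plain vector quantization: return (index, codeword) of the nearest codeword."""
    index = min(range(len(codebook)),
                key=lambda i: squared_distance(vector, codebook[i]))
    return index, codebook[index]


def factorized_quantize(
    latent: Vector,
    subspaces: Dict[str, Tuple[int, int]],
    codebooks: Dict[str, List[Vector]],
) -> Dict[str, Tuple[int, Vector]]:
    """Quantize each attribute subspace of one latent frame with its own codebook.

    Hypothetical simplification: each subspace is a fixed slice [start, end)
    of the latent vector instead of a learned projection.
    """
    codes: Dict[str, Tuple[int, Vector]] = {}
    for name, (start, end) in subspaces.items():
        codes[name] = quantize(latent[start:end], codebooks[name])
    return codes


# Toy data (hypothetical): an 8-dim latent frame split into four 2-dim subspaces,
# labeled after the attributes named in the abstract.
EXAMPLE_SUBSPACES = {
    "content": (0, 2),
    "prosody": (2, 4),
    "timbre": (4, 6),
    "acoustic_details": (6, 8),
}
EXAMPLE_CODEBOOKS = {
    "content": [[1.0, 0.0], [0.0, 1.0]],
    "prosody": [[1.0, 0.0], [-1.0, 0.0]],
    "timbre": [[0.0, 0.0], [1.0, 1.0]],
    "acoustic_details": [[0.0, 0.0], [1.0, 1.0]],
}
EXAMPLE_LATENT = [0.9, 0.1, -1.0, 0.2, 0.0, 0.0, 0.6, 0.7]

if __name__ == "__main__":
    codes = factorized_quantize(EXAMPLE_LATENT, EXAMPLE_SUBSPACES, EXAMPLE_CODEBOOKS)
    # One discrete index per attribute subspace.
    print({name: index for name, (index, _) in codes.items()})
    # prints {'content': 0, 'prosody': 1, 'timbre': 0, 'acoustic_details': 1}
```

Keeping a separate codebook per subspace means each attribute gets its own discrete code, which is the property a factorized, divide-and-conquer design relies on.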

Update: We have released the code and pre-trained checkpoint of FACodec here!

This research is done in alignment with Microsoft's responsible AI principles.

This page is for research demonstration purposes only.

Overview

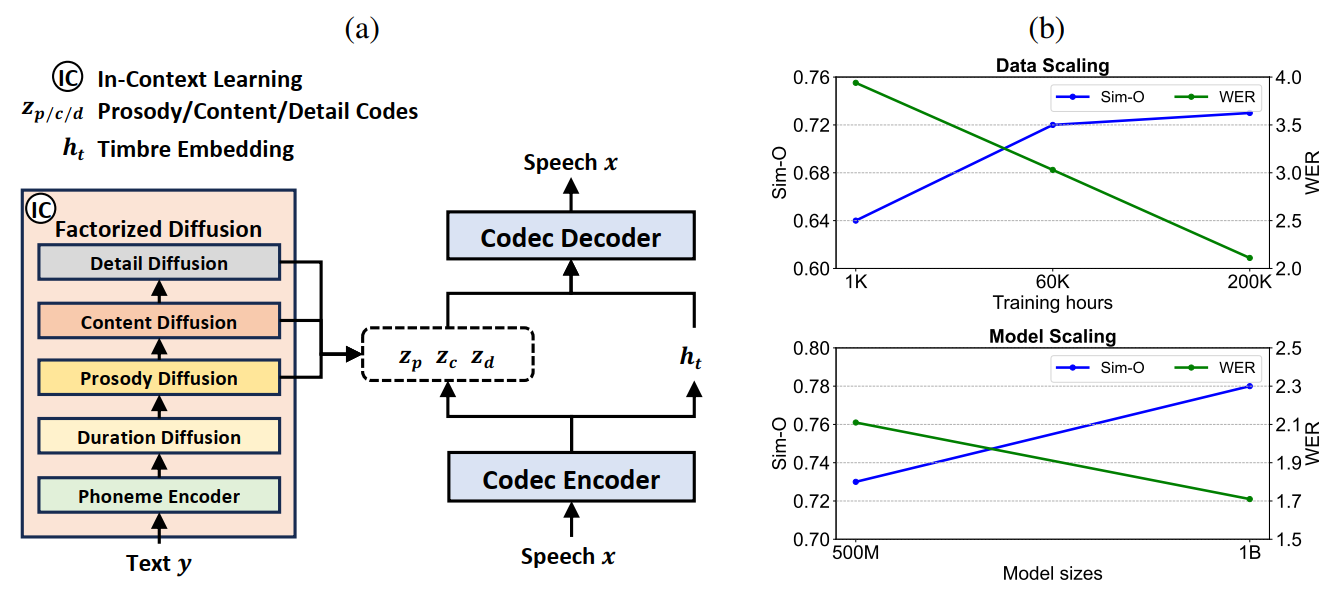

(a) Overview of NaturalSpeech 3, with a neural speech codec for speech attribute factorization and a factorized diffusion model. (b) Data and model scaling of NaturalSpeech 3 on an internal test set.

Zero-Shot TTS Samples (Emotion)

| Prompt Emotion | Prompt | NaturalSpeech 3 |
|---|---|---|
| neutral | | |
| happy | | |
| calm | | |
| sad | | |
| angry | | |
| fearful | | |
| disgust | | |
| surprised | | |

Zero-Shot TTS Samples

| Text | Prompt | NaturalSpeech 3 |
|---|---|---|
| They think you're proud because you've been away to school or something. | | |
| There was an average cost per lamp for meter operation of twenty two cents a year, and each meter took care of an average of seventeen lamps. | | |
| For the past ten years, Conseil had gone with me wherever science beckoned. | | |
| There were only four stationers of any consequences in the town, and at each Holmes produced his pencil chips, and bid high for a duplicate. | | |

Attribute Manipulation

Duration manipulation.

| Duration Prompt | Other Prompts | NaturalSpeech 3 |
|---|---|---|
| Original prompt. | | |
| 0.7x speed. | | |
| 1.3x speed. | | |
| New prompt with a fast speech rate. | | |

Prosody manipulation.

| Prosody Prompt | Other Prompts | NaturalSpeech 3 |
|---|---|---|
| Original prompt. | | |
| New prompt in sad emotion. | | |

Timbre manipulation.

| Timbre Prompt | Other Prompts | NaturalSpeech 3 |
|---|---|---|
| Original prompt. | | |
| New prompt from a different speaker. | | |

Comparison Results on LibriSpeech Benchmark

| System | Sim-O↑ | Sim-R↑ | WER↓ | CMOS↑ | SMOS↑ |
|---|---|---|---|---|---|
| Ground Truth | 0.68 | - | 1.94 | +0.08 | 3.85 |
| NaturalSpeech 2 | 0.55 | 0.62 | 1.94 | -0.18 | 3.65 |
| Voicebox | 0.64 | 0.67 | 2.03 | -0.23 | 3.69 |
| Voicebox (R) | 0.48 | 0.50 | 2.14 | -0.32 | 3.52 |
| VALL-E (P) | - | 0.58 | 5.90 | - | - |
| VALL-E (R) | 0.47 | 0.51 | 6.11 | -0.60 | 3.46 |
| Mega-TTS 2 | 0.53 | - | 2.32 | -0.20 | 3.63 |
| UniAudio | 0.57 | 0.68 | 2.49 | -0.25 | 3.71 |
| StyleTTS 2 | 0.38 | - | 2.49 | -0.21 | 3.07 |
| HierSpeech++ | 0.51 | - | 6.33 | -0.41 | 3.50 |
| NaturalSpeech 3 | 0.67 | 0.76 | 1.81 | 0.00 | 4.01 |

| Text | Prompt | Ground Truth | NaturalSpeech 3 | NaturalSpeech 2 | Voicebox | Voicebox (R) | VALL-E (R) | Mega-TTS 2 | UniAudio | StyleTTS 2 | HierSpeech++ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| It is this that is of interest to theory of knowledge. | Sim-O: 0.73 | Sim-O: 0.65 | Sim-O: 0.71 | Sim-O: 0.49 | Sim-O: 0.55 | Sim-O: 0.55 | Sim-O: 0.62 | Sim-O: 0.39 | Sim-O: 0.59 | ||
| For, like as not, they must have thought him a prince when they saw his fine cap. | Sim-O: 0.73 | Sim-O: 0.43 | Sim-O: 0.62 | Sim-O: 0.54 | Sim-O: 0.45 | Sim-O: 0.42 | Sim-O: 0.47 | Sim-O: 0.40 | Sim-O: 46 | ||
| What you had best do, my child, is to keep it and pray to it that since it was a witness to your undoing, it will deign to vindicate your cause by its righteous judgment. | Sim-O: 0.69 | Sim-O: 0.66 | Sim-O: 0.59 | Sim-O: 0.59 | Sim-O: 0.61 | Sim-O: 0.46 | Sim-O: 0.53 | Sim-O: 0.52 | Sim-O: 0.49 | ||
| The strong position held by the Edison system under the strenuous competition that was already springing up was enormously improved by the introduction of the three wire system and it gave an immediate impetus to incandescent lighting. | Sim-O: 0.81 | Sim-O: 0.64 | Sim-O: 0.67 | Sim-O: 0.65 | Sim-O: 0.65 | Sim-O: 0.60 | Sim-O: 0.45 | Sim-O: 0.44 | Sim-O: 0.55 |

Comparison Results on RAVDESS Benchmark

| System | Average MCD↓ | MCD-Acc↑ | CMOS↑ | SMOS↑ |
|---|---|---|---|---|
| Ground Truth | 0.00 | 1.00 | +0.17 | 4.42 |
| NaturalSpeech 2 | 4.56 | 0.25 | -0.22 | 4.04 |
| Voicebox (R) | 4.88 | 0.34 | -0.34 | 3.92 |
| VALL-E (R) | 5.03 | 0.34 | -0.55 | 3.80 |
| Mega-TTS 2 | 4.44 | 0.39 | -0.20 | 4.51 |
| StyleTTS 2 | 4.50 | 0.40 | -0.25 | 3.98 |
| HierSpeech++ | 6.08 | 0.30 | -0.37 | 3.87 |
| NaturalSpeech 3 | 4.28 | 0.52 | 0.00 | 4.72 |

| Prompt Emotion | Prompt | Ground Truth | NaturalSpeech 3 | NaturalSpeech 2 | Voicebox (R) | VALL-E (R) | Mega-TTS 2 | StyleTTS 2 | HierSpeech++ |
|---|---|---|---|---|---|---|---|---|---|
| neutral | | | | | | | | | |
| happy | | | | | | | | | |
| calm | | | | | | | | | |
| sad | | | | | | | | | |
| angry | | | | | | | | | |
| fearful | | | | | | | | | |
| disgust | | | | | | | | | |
| surprised | | | | | | | | | |

FACodec: Reconstruction Samples

| Ground Truth | FACodec 4.8 kbps | SoundStream (R) 4.8 kbps | SoundStream (R) 9.6 kbps | Encodec 6.0 kbps | Encodec (R) 5.0 kbps | HiFi-Codec 2.0 kbps | DAC 4.5 kbps |
|---|---|---|---|---|---|---|---|

FACodec: Voice Conversion Samples

| Prompt | Source | FACodec |
|---|---|---|

Ethics Statement

Since our model can synthesize speech with high speaker similarity, it carries potential risks of misuse, such as spoofing voice identification or impersonating a specific speaker. We conducted the experiments under the assumption that the user agrees to be the target speaker in speech synthesis. To prevent misuse, it is crucial to develop a robust synthesized speech detection model and establish a system for individuals to report any suspected misuse.